AI is regarded as the next big thing—so big that future technologies will be dependent on it. But then, do we really know what we are getting ourselves into? Here are ten scary facts about artificial intelligence.

10 Your Self-Driving Car Might Be Programmed To Kill You

Let’s assume you’re driving down a road when a group of children suddenly appears in front of your car. You hit the brakes, but they don’t work. Now you have two options: run over the children and save your own life, or swerve into a nearby wall or bollard, saving the children but killing yourself. Which would you pick? Most people say they would swerve into the bollard and kill themselves.

Now imagine that your car is self-driving, and you’re the passenger. Would you still want it to swerve into the bollard and kill you? Most people who said they would swerve if they were the driver also said they would not want their self-driving car to do the same. In fact, they wouldn’t even buy such a car if they knew it would deliberately put them at risk in an accident.

This takes us to another question: What would the cars do? The cars will do what they are programmed to do, and as things stand, makers of self-driving cars aren’t talking. Most, like Apple, Ford, and Mercedes-Benz, tactfully dodge the question at every turn. An executive of Daimler AG (the parent company of Mercedes-Benz) once stated that their self-driving cars would “protect [the] passenger at all costs.” However, Mercedes-Benz denied this, stating that its vehicles are built to ensure that such a dilemma never arises. That is evasive, because we all know that such situations will happen.

Google came clean on this and said its self-driving cars would avoid hitting unprotected road users and moving things. This means the car would hit the bollard and kill its occupant. Google further clarified that in the event of an impending accident, its self-driving cars would hit the smaller of any two vehicles. In fact, Google’s self-driving cars might seek to stay closer to smaller objects at all times: Google currently has a patent on a technology that makes its self-driving cars move away from bigger cars and toward smaller cars while on the road.[1]

9 Robots Might Demand Rights Just Like Humans

With the current trends in AI, it’s possible that robots will reach a stage of self-realization. When that happens, they may demand rights as if they were humans. That is, they’ll require housing and health care benefits and demand to be allowed to vote, serve in the military, and be granted citizenship. In return, governments would make them pay taxes. This is according to a study by the UK Office of Science and Innovation’s Horizon Scanning Center. The research was reported by the BBC in 2006, when AI was far less advanced, and it was conducted to speculate about the technological advances we might see in 50 years’ time. Does this mean that machines will start demanding citizenship in about 40 years? Only time will tell.[2]

8 Automatic Killer Robots Are In Use

When we say “automatic killer robots,” we mean robots that can kill without human intervention. Drones don’t count because they are controlled by people. One of the automatic killer robots we’re talking about is the SGR-A1, a sentry gun jointly developed by Samsung Techwin (now called Hanwha Techwin) and Korea University. The SGR-A1 resembles a huge surveillance camera, except that it has a high-powered machine gun that can automatically lock onto and kill any target of interest. The SGR-A1 is already in use in Israel and South Korea, which has installed several units along its Demilitarized Zone (DMZ) with North Korea. South Korea denies activating the auto mode that allows the machine to decide who to kill and who not to kill. Instead, the machine is in a semi-automatic mode, where it detects targets but requires the approval of a human operator to execute a kill.[3]

7 War Robots Can Switch Sides

In 2011, Iran captured a highly secretive RQ-170 Sentinel stealth drone from the United States military, intact. That last word is necessary because it means the drone was not shot down. Iran claims it forced the drone to land after spoofing its GPS signal and making it think it was in friendly territory. Some US experts claim this is not true, but then, the drone wasn’t shot down. So what happened? For all we know, Iran could be telling the truth.

Drones, GPS, and robots are all based on computers, and as we all know, computers do get hacked. War robots would be no different if they ever made it to the battlefield. In fact, there is every possibility that the enemy army would attempt to hack them and use them against the very army fielding them. Autonomous killer robots are not yet in widespread use, so we have never seen any hacked. However, imagine an army of robots suddenly switching allegiance on the battlefield and turning against their own masters. Or imagine North Korea hacking those SGR-A1 sentry guns at the DMZ and using them against South Korean soldiers.[4]

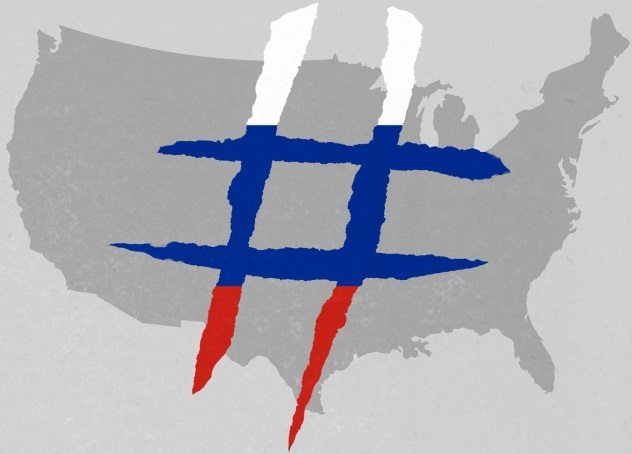

6 Russia Is Using Bots To Spread Propaganda On Twitter

Bots are taking over Twitter. Research by the University of Southern California and Indiana University has indicated that about 15 percent (48 million) of all Twitter accounts are operated by bots. Twitter insists that the figure is around 8.5 percent. To be clear, not all these bots are bad. Some are actually beneficial. For instance, there are bots that inform people of natural disasters. However, some are being used for propaganda, most notably by Russia.

Russia is still in the news for using these bots to sow discord among US voters and sway them toward voting for Donald Trump during the 2016 election. Another little-reported incident is Russia using these bots to sway UK voters into voting to leave the European Union during the 2016 Brexit referendum. Days before the referendum, more than 150,000 Russian bots, which had previously concentrated on tweets relating to the war in Ukraine and Russia’s annexation of Crimea, suddenly started churning out pro-Brexit tweets encouraging the UK to leave the EU. These bots sent about 45,000 pro-Brexit tweets within two days of the referendum, but the tweets fell to almost zero immediately after the referendum.

What’s worse is that Russia also uses these same bots to get Twitter to ban journalists who expose its extensive use of bots for propaganda. Once Russia detects an article reporting the existence of the bots, it finds the author’s Twitter page and gets its bots to follow the author en masse until Twitter bans the author’s account on suspicion of being operated by a bot.[5] Worst of all, Russia has seriously improved its bot game. These days, it has moved from using full bots to using cyborgs: accounts that are jointly operated by humans and bots. This has made it more difficult for Twitter to detect and ban these accounts.

5 Machines Will Take Our Jobs

No doubt, machines will take over our jobs one day. What we don’t know is when they will take over, and to what extent. Well, as we’re about to find out, it’s to a large extent. According to top consultancy and auditing firm PricewaterhouseCoopers (PwC), robots will take over 21 percent of the jobs in Japan, 30 percent of jobs in the United Kingdom, 35 percent of jobs in Germany, and 38 percent of jobs in the United States by the year 2030.[6] By the next century, they will have taken over more than half of the jobs available to humans.

The most affected sector will be transportation and storage, where 56 percent of the workforce will be machines. This is followed by the manufacturing and retail sectors, where machines will take over 46 and 44 percent of all available jobs, respectively. As for “when,” it is speculated that machines will be driving trucks by 2027 and manning retail stores by 2031. By 2049, they’ll be writing books, and by 2053, they’ll be performing surgery. Only a few professions will be free of the machine incursion. One is the role of a church minister, which would remain free not because a machine can’t run a church but because most people won’t approve of being preached to by a robot.

4 Robots Have Learned To Be Deceitful

In human-style fashion, robots are learning to be deceitful. In one experiment, researchers at the Georgia Institute of Technology in Atlanta developed an algorithm that allowed robots to decide whether or not to deceive humans or other robots. If a robot chose deceit, a second algorithm let it decide how to deceive while reducing the likelihood that the person or robot being deceived would ever find out.

In the experiment, a robot was given some resources to guard. It frequently checked on the resources but started visiting false locations whenever it detected the presence of another robot in the area. This experiment was sponsored by the United States Office of Naval Research, which means it might have military applications. Robots guarding military supplies could change their patrol routes if they noticed they were being watched by enemy forces.

In another experiment, this time at the École Polytechnique Fédérale de Lausanne in Switzerland, scientists created 1,000 robots and divided them into ten groups. The robots were required to look for a “good resource” in a designated area while avoiding a “bad resource.” Each robot had a blue light, which it flashed to attract other members of its group whenever it found the good resource. The best 200 robots were taken from this first experiment, and their algorithms were “crossbred” to create a new generation of robots.

The robots improved at finding the good resource. However, this led to congestion as other robots crowded around the prize. In fact, things got so bad that the robot that found the resource was sometimes pushed away from its find. After 500 generations, the robots had learned to keep their lights off whenever they found the good resource, which prevented congestion and reduced the likelihood that they would be pushed away if other members of the group joined them. At the same time, other robots evolved to find the lying robots by seeking areas where robots converged with their lights off, which is the exact opposite of what they were programmed to do.[7]
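For readers curious how “crossbreeding” the best robots can produce this kind of behavior, here is a minimal toy sketch in Python. It is not the researchers’ code or their robot model. Everything in it is an assumption made for illustration: each robot is reduced to a single number (`signal_prob`, the chance it flashes its light on finding the good resource), the scoring rule and the 50 percent crowding penalty are invented, and the function names are hypothetical. Only the population size, the top-fifth selection, and the generation count echo the figures above.

```python
import random


def fitness(signal_prob, rng, rounds=20):
    """Toy score (assumed, not from the study): one point per round spent on
    the good resource, lost if the robot signalled and got crowded out."""
    score = 0.0
    for _ in range(rounds):
        signalled = rng.random() < signal_prob
        # Assumption: signalling attracts a crowd that pushes the finder
        # away half of the time.
        crowded_out = signalled and rng.random() < 0.5
        if not crowded_out:
            score += 1.0
    return score


def next_generation(population, rng, mutation_sd=0.05):
    """Keep the best fifth (e.g. 200 of 1,000) and crossbreed them."""
    ranked = sorted(population, key=lambda g: fitness(g, rng), reverse=True)
    n_parents = max(2, len(ranked) // 5)
    parents = ranked[:n_parents]
    children = []
    while len(children) < len(population):
        a, b = rng.sample(parents, 2)
        # Crossbreed by averaging the two parents, then mutate slightly.
        child = (a + b) / 2 + rng.gauss(0, mutation_sd)
        children.append(min(1.0, max(0.0, child)))  # clamp to [0, 1]
    return children


def evolve(size=1000, generations=500, seed=0):
    """Run the toy evolution and return the final signalling probabilities."""
    rng = random.Random(seed)
    population = [rng.random() for _ in range(size)]
    for _ in range(generations):
        population = next_generation(population, rng)
    return population
```

A smaller run, such as `evolve(size=100, generations=30)`, finishes quickly. In this toy setup, robots that signal less tend to score higher, so the average `signal_prob` tends to fall from one generation to the next, loosely mirroring the robots that learned to keep their lights off.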

3 The AI Market Is Being Monopolized

The AI market is being monopolized. Bigger companies are buying smaller AI startups at an alarming rate. If the current trend continues, we could end up with AI that is controlled by a very small number of corporations. As of October 2016, reports indicated that companies like Apple, Facebook, Intel, Twitter, Samsung, and Google had purchased 140 artificial intelligence businesses over five years. In the first three months of 2017, big tech companies bought 34 AI startups.[8] Worse, they’re also paying huge sums to hire the top scholars in the field of artificial intelligence. If this remains unchecked, you can guess where we’re heading.

2 AI Will Exceed Humans In Reasoning And Intelligence

Artificial intelligence is classified into two groups: strong and weak AI. The AI around us today is classified as weak AI. This includes supposedly advanced AIs like smart assistants and computers that have been defeating chess masters since 1987. The difference between strong and weak AI is the ability to reason and behave like a human brain. Weak AI generally does what it was programmed to do, irrespective of how sophisticated that task might seem to us. Strong AI, at the other end of the spectrum, has the consciousness and reasoning ability of humans. It is not limited by the scope of its programming and can decide what to do and what not to do without human input. Strong AI does not exist for now, but some scientists predict it could be around in ten years’ time.[9]

1 AI Could Destroy Us

There are fears that the world might end up in an AI apocalypse, just as in the Terminator film franchise. The warnings that AI might destroy us aren’t coming from some random scientist or conspiracy theorist but from eminent figures like Stephen Hawking, Elon Musk, and Bill Gates. Bill Gates thinks AI will become too intelligent to remain under our control. Stephen Hawking shared the same opinion. He didn’t think AI would suddenly go berserk overnight. Rather, he believed machines would destroy us by becoming too competent at what they do. Our conflict with AI will begin the moment its goals are no longer aligned with ours.[10] Elon Musk has compared the proliferation of AI to “summoning the demon.” He believes it is the biggest threat to humanity. To prevent the AI apocalypse, he has proposed that governments start regulating the development of AI before for-profit companies do “something very foolish.”